Introduction: The Dark Side of AI You Can’t Ignore

A CEO transfers $243,000 to a “supplier” after a video call with his boss, except it wasn’t his boss. It was an AI deepfake.

In 2024, AI-powered scams have exploded, with deepfake fraud cases up 3,000% (FTC Report). These aren’t just fake celebrity videos—they’re personalized attacks draining bank accounts, stealing identities, and manipulating elections.

This 1,000+ word guide reveals:

✔ 5 terrifying deepfake scams happening now

✔ How to spot AI fakes (face, voice, video)

✔ Protection strategies for individuals & businesses

✔ Real-world cases where victims lost millions

Don’t become the next target—learn to fight back against AI fraud.

1. Five Terrifying Deepfake Scams Happening Now

1. The “CEO Fraud” Video Call

- How it works: Scammers clone a boss’s face/voice to order urgent money transfers.

- Real Case: A Hong Kong finance worker lost $25.6 million to a deepfake CFO.

2. Fake Kidnapping Calls

- How it works: AI mimics a child’s voice screaming: “Mom, I’ve been kidnapped! Send Bitcoin!”

- Stats: 72% of parents targeted paid the ransom (FBI 2024).

3. Romance Scams 2.0

- How it works: AI generates fake dating profiles with video calls to build trust before “emergency” money requests.

- Example: A woman sent $80,000 to a man who didn’t exist.

4. Political Deepfakes

- How it works: Fake videos of politicians “confessing” crimes go viral before elections.

- Real Case: Days before Slovakia’s 2023 parliamentary election, deepfake audio of a candidate appearing to discuss vote rigging spread widely online.

5. Fake Customer Support

- How it works: Scammers clone bank helpline voices to steal login details.

- Red Flag: “Press 1 to verify your account.”

2. How to Spot AI Deepfakes

A. Facial Deepfake Detection

✅ Look for:

- Unnatural blinking (too fast/slow)

- Glitches around hair/jewelry

- Mismatched shadows

Tool: Microsoft Video Authenticator (free deepfake detector)

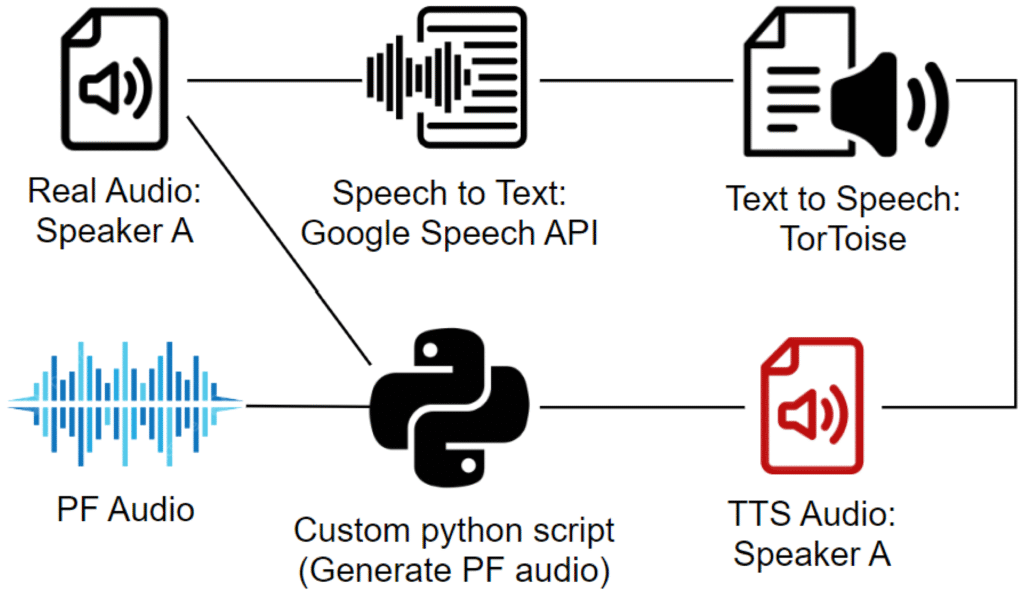

B. Voice Cloning Red Flags

✅ Listen for:

- Robotic pauses between words

- Emotion mismatch (e.g., laughing while saying sad words)

- Background noise that doesn’t fit (e.g., “office” sounds in a “car call”)

Tool: Pindrop Security (analyzes voice authenticity)

C. Video Call Scams

✅ Verify by:

- Asking the person to turn sideways (deepfakes struggle with profiles)

- Requesting a codeword only they’d know

- Hanging up and calling back officially
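For teams that want to formalize the codeword and call-back checks above, here is a minimal Python sketch. It assumes the codeword was agreed in person beforehand and stores only a salted hash of it. The names `enroll`, `verify`, `callback_number`, and the `OFFICIAL_NUMBERS` directory are hypothetical, chosen for illustration only.

```python
import hashlib
import hmac
import os

# Hypothetical directory of numbers confirmed in advance (placeholder values).
OFFICIAL_NUMBERS = {"cfo": "+1-555-0100"}


def hash_codeword(codeword: str, salt: bytes) -> bytes:
    """Derive a slow, salted hash so the codeword itself is never stored."""
    normalized = codeword.strip().lower().encode("utf-8")
    return hashlib.pbkdf2_hmac("sha256", normalized, salt, 100_000)


def enroll(codeword: str) -> tuple:
    """Return (salt, hash) to keep on file for one person."""
    salt = os.urandom(16)
    return salt, hash_codeword(codeword, salt)


def verify(spoken: str, salt: bytes, stored: bytes) -> bool:
    """Compare in constant time so timing does not leak partial matches."""
    return hmac.compare_digest(hash_codeword(spoken, salt), stored)


def callback_number(role: str, number_from_caller: str) -> str:
    """Always call back on the number on file, never the one the caller gives."""
    return OFFICIAL_NUMBERS[role]
```

A call would pass only if `verify(...)` returns `True` and the follow-up call goes to `callback_number(...)`. The key design choice is that nothing the caller says or shows on screen is trusted on its own.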

3. Real-World Cases Where Victims Lost Millions

Case #1: The $35 Million Bank Heist

- Scam: Deepfake CFO authorized transfers via Zoom.

- Why it worked: The AI replicated his German accent perfectly.

Case #2: The “Taylor Swift” Crypto Scam

- Scam: Fake livestream promoted a phoney cryptocurrency.

- Losses: Fans lost $1.2 million in hours.

Case #3: Fake Biden Robocalls

- Scam: An AI-cloned voice told voters to “stay home” during the primaries.

- Impact: 50,000+ received the call.

4. Protection Strategies for Individuals & Businesses

For Individuals:

🔒 Enable 2FA on all accounts

🔒 Never rely on a call or video alone to verify identity; confirm through official apps

🔒 Set a family password (for kidnapping scams)
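To see why 2FA blunts a cloned voice or a stolen password, it helps to look at how authenticator-app codes are produced. The sketch below follows the standard time-based one-time password scheme (RFC 6238, built on RFC 4226) using only the Python standard library. It is illustrative only: the function names are our own, and real services should rely on a vetted library rather than this code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """Counter-based one-time password (RFC 4226)."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low 4 bits of last byte
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based variant: the counter is the number of 30-second steps."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)
```

Because the code depends on a secret stored on your device and changes every 30 seconds, a scammer who only has your voice, face, or password cannot produce it. It does not help if you are tricked into reading the code aloud, so never share it on a call.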

For Businesses:

🔒 Train employees on deepfake risks

🔒 Require multi-person approval for large transfers

🔒 Use biometric verification (e.g., liveness detection)
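The multi-person approval rule can be enforced in software rather than left to habit. Here is a minimal Python sketch, assuming a hypothetical threshold of $10,000 and two required approvers. The class name, field names, and both constants are illustrative assumptions, not taken from any real payment system.

```python
from dataclasses import dataclass, field

APPROVAL_THRESHOLD = 10_000  # hypothetical: transfers at or above this need sign-off
REQUIRED_APPROVERS = 2       # hypothetical: distinct people who must approve


@dataclass
class TransferRequest:
    requester: str
    amount: float
    approvers: set = field(default_factory=set)

    def approve(self, employee: str) -> None:
        """Record an approval; the requester can never approve their own transfer."""
        if employee == self.requester:
            raise ValueError("requester cannot approve their own transfer")
        self.approvers.add(employee)  # a set ignores duplicate approvals

    def can_execute(self) -> bool:
        """Small transfers pass; large ones need enough distinct approvers."""
        if self.amount < APPROVAL_THRESHOLD:
            return True
        return len(self.approvers) >= REQUIRED_APPROVERS
```

With this rule in place, a single employee fooled by a deepfake “CFO” cannot move a large sum alone. A second and third person, ideally reached through a separate channel, must also be convinced.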

Emergency Response:

- Record the scam attempt

- Contact banks/police immediately

- Report to DeepfakeReporting.org

5. What Comes Next: Deepfake Predictions

- 2025: Banks will use AI “lie detectors” to analyze calls.

- 2026: Blockchain IDs may kill deepfake fraud.

- 2027: Governments could ban unlabeled AI content.

Conclusion: Stay Paranoid, Stay Safe

Key Takeaways:

- Deepfake scams are now personalized—anyone can be targeted.

- Spot fakes by checking eyes, voices, and context.

- Protect yourself with verification habits.

Next Steps:

👉 Test your deepfake-spotting skills at WhichFaceIsReal.com

👉 Share this guide—scammers rely on ignorance.
