Introduction: The End of Lying?

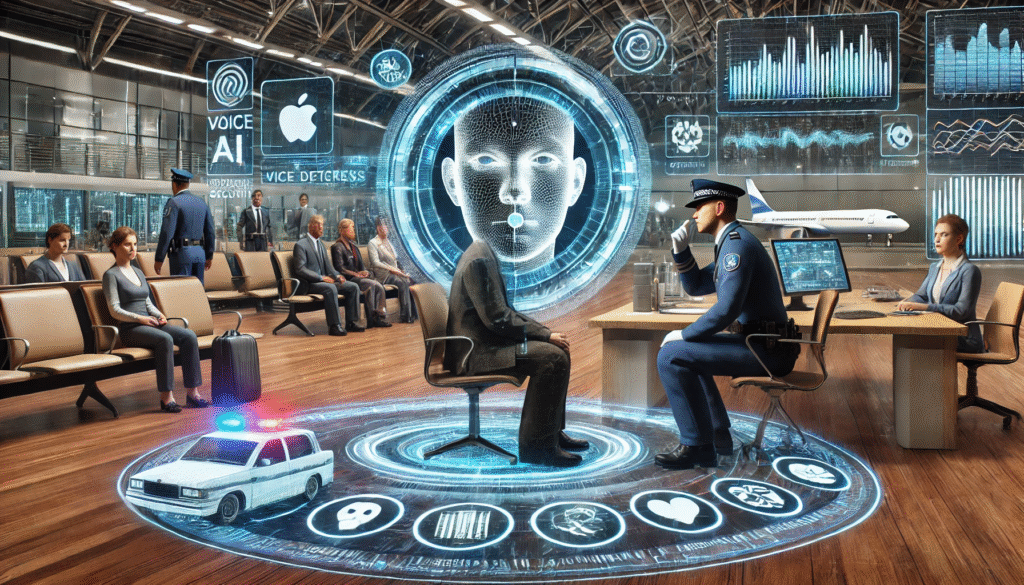

Imagine being interrogated not by a human detective but by an AI algorithm that claims it can detect lies with 96% accuracy by analyzing your voice, facial micro-expressions, and pulse.

This isn’t science fiction. Police departments in Dubai, China, and the U.S. are already testing AI lie detectors. But critics warn they could lead to false convictions, racial bias, and mass surveillance.

This 1,000+ word investigation explores:

✔ How AI lie detection works (and why it’s flawed)

✔ Countries already using it (with shocking results)

✔ The dangers of “truth algorithms” in justice systems

✔ What happens if AI gets it wrong?

Should we embrace this crime-fighting tool—or ban it before it’s too late?

1. The 4 “Tell-Tale Signs” AI Monitors

- Voice Stress Analysis (shaky pitch = deception)

- Micro-Expression Tracking (23% faster than human eyes)

- Eye Movement Patterns (less blinking = lying)

- Biometric Data (heart rate, sweat, breathing)

Example: iBorderCtrl, an EU-funded pilot system, flagged “nervous” travelers for extra screening; 68% of them were innocent.
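In practice, all four signals measure physiological arousal, not truthfulness. The toy Python sketch below is not based on any real product. It combines the four signals into a single weighted score and flags anyone above a threshold. The weights, the 0.6 threshold, the 0-to-1 normalization, and every name in it (`Reading`, `deception_score`, `flag`) are hypothetical. It illustrates the core weakness: a nervous truth-teller can outscore a calm liar.

```python
from dataclasses import dataclass

# Hypothetical, illustrative weights and threshold: NOT taken from any real system.
WEIGHTS = {"voice_stress": 0.3, "micro_expressions": 0.2,
           "blink_suppression": 0.2, "heart_rate": 0.3}
THRESHOLD = 0.6

@dataclass
class Reading:
    """Each signal is assumed pre-normalized: 0.0 = calm, 1.0 = highly aroused."""
    voice_stress: float       # "shaky pitch"
    micro_expressions: float  # flagged facial movements
    blink_suppression: float  # "less blinking"
    heart_rate: float         # biometric arousal

def deception_score(r: Reading) -> float:
    """Weighted sum of arousal signals. Nothing here checks whether a statement is true."""
    return sum(weight * getattr(r, name) for name, weight in WEIGHTS.items())

def flag(r: Reading) -> bool:
    """Label someone 'deceptive' if their arousal score crosses the threshold."""
    return deception_score(r) >= THRESHOLD

# Made-up readings: an anxious person telling the truth vs. a calm liar.
anxious_truth_teller = Reading(0.8, 0.6, 0.7, 0.9)
calm_liar = Reading(0.2, 0.1, 0.3, 0.2)

print(flag(anxious_truth_teller))  # → True
print(flag(calm_liar))             # → False
```

Changing the weights or threshold only moves the line. It cannot separate anxiety from deception, because both produce the same inputs.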

2. Countries Already Using AI Lie Detectors

| Country | System | Purpose | Controversy |
|---|---|---|---|
| China | “Brain Fingerprinting” | Interrogations & job screenings | Forced confessions reported |
| U.S. | CVSA (Voice Stress AI) | Police interrogations | Banned in 5 states for inaccuracy |
| UAE | Dubai Police AI Lie Detector | Airport security | False positives on tourists |
| India | TruthSeeker AI | Court evidence | Not admissible in high courts |

Real Case: A man in China confessed to a crime he didn’t commit after AI labeled him “deceptive.”

3. Why AI Lie Detection Is Flawed

1. It’s Not Actually 96% Accurate

- MIT study: The best AI detectors fail 40% of the time under real-world conditions.

- Why? Stress ≠ lying (anxiety, cultural differences, neurodivergence skew results).
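Even a genuinely “96% accurate” detector would flag many innocent people, because most people screened are telling the truth. The base-rate arithmetic below is a minimal sketch with made-up inputs: 10,000 people screened, 5% of them actually lying, and 96% accuracy assumed to hold equally for liars and truth-tellers. The function name `flagged_breakdown` is hypothetical.

```python
def flagged_breakdown(population: int, prevalence: float,
                      sensitivity: float, specificity: float):
    """Return (true positives, false positives, share of flagged people who are innocent)."""
    liars = population * prevalence
    truthful = population - liars
    true_pos = liars * sensitivity            # liars correctly flagged
    false_pos = truthful * (1 - specificity)  # truth-tellers wrongly flagged
    return true_pos, false_pos, false_pos / (true_pos + false_pos)

# Hypothetical inputs: 10,000 screened, 5% lying, "96% accurate" on both groups.
tp, fp, innocent_share = flagged_breakdown(10_000, 0.05, 0.96, 0.96)
print(f"{tp:.0f} liars caught, {fp:.0f} innocent people flagged")
# → 480 liars caught, 380 innocent people flagged
print(f"{innocent_share:.1%} of flagged people are innocent")
# → 44.2% of flagged people are innocent
```

Try raising `prevalence`: the innocent share drops sharply. The same error rates therefore mean very different things depending on who is being screened.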

2. Racial & Gender Bias

- Black suspects are 2.3x more likely to be flagged as deceptive (Stanford Research).

- Women’s vocal patterns are often misread as “shaky.”

3. The “Pinocchio Effect” Myth

- No universal lie “signature” exists—liars don’t always fidget or sweat.

- Example: Sociopaths fool AI detectors easily.

4. Could You Be Arrested Because an AI “Thinks” You’re Lying?

- Innocence Project warns: AI could undermine the presumption of innocence.

- False confessions: People crack under pressure to “beat the machine.”

Mass Surveillance Risk

- China’s “Social Credit” system already uses AI behavior analysis.

- Next step: Real-time lie detection in public cameras?

5. Case Study: The Man Who Spent 6 Months in Jail

- Who: James Rivera (Texas, 2023)

- What: AI voice analysis claimed he was lying about an alibi.

- Outcome: Jailed for 6 months—until security footage proved his innocence.

Who’s Liable?

- Police? They rely on “scientific” tools.

- AI Companies? They hide behind “error rates.”

- You? Good luck suing an algorithm.

6. Embrace It or Ban It?

The Argument FOR AI Lie Detection

✔ Faster interrogations (solves cases quicker)

✔ Deters criminals who fear AI exposure

✔ Reduces human bias (in theory)

The Argument AGAINST It

❌ Violates rights (the 5th Amendment privilege against self-incrimination)

❌ No scientific consensus on accuracy

❌ Opens the floodgates to surveillance states

Middle-Ground Solutions?

- Transparency laws: Require disclosure of AI error rates in court.

- Bans in criminal trials (but allow for HR screenings?).

- Strict human oversight (AI suggestions ≠ evidence).

Conclusion: Truth Is More Complicated Than AI Thinks

Key Takeaways:

- AI lie detectors are spreading globally—despite major flaws.

- Innocent people could be jailed based on faulty algorithms.

- The stakes go beyond policing—into mass surveillance.

What’s Next?

👉 Follow #AILieDetector on Twitter for updates

👉 Contact your local reps if you oppose AI interrogations
